2. System Architecture and Configuration

- 2.1 Processor architecture

- 2.2 Building block architecture

- 2.3 Memory architecture

- 2.4 Interconnect

- 2.5 I/O subsystem architecture

- 2.6 Available file systems

- 2.7 Operating system (CLE)

This section provides an overview of the Cray XE architecture and configurations. Cray XE systems generally consist of two types of nodes: service nodes and compute nodes. Service nodes are used for a variety of tasks on the system including acting as login nodes.

2.1 Processor architecture

2.1.1 Compute node hardware

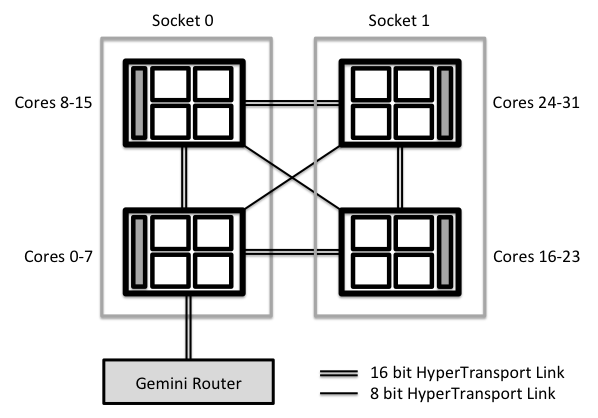

HECToR Cray XE compute nodes contain two 16-core 2.3 GHz AMD Interlagos Opteron processors. These processors are connected to each other, to main memory and to the Gemini router by HyperTransport links (see Figure 2.1 below).

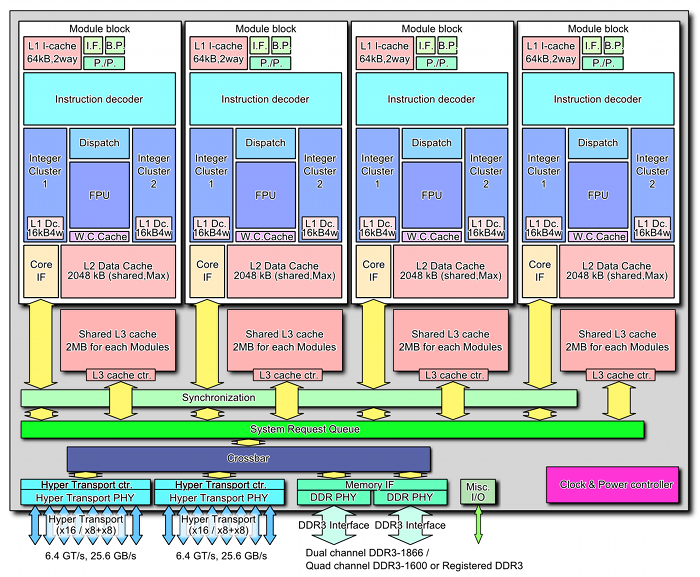

Each Opteron processor consists of two NUMA regions (or dies) each containing 8 cores. Each NUMA region is made up of 4 modules each of which has two cores and which also contains a shared floating point execution unit (see Figure 2.2 below).
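The hierarchy described above (two processors, each with two NUMA regions of four two-core modules) can be sketched as a small calculation. This is only an illustration: the `locate_core` helper is hypothetical, and it assumes that cores are numbered consecutively so that neighbouring IDs share a module. The actual numbering on a given system should be checked before relying on it.

```python
# Topology figures taken from the text above.
SOCKETS_PER_NODE = 2
NUMA_REGIONS_PER_SOCKET = 2
MODULES_PER_NUMA_REGION = 4
CORES_PER_MODULE = 2

CORES_PER_NUMA_REGION = MODULES_PER_NUMA_REGION * CORES_PER_MODULE
CORES_PER_SOCKET = NUMA_REGIONS_PER_SOCKET * CORES_PER_NUMA_REGION
CORES_PER_NODE = SOCKETS_PER_NODE * CORES_PER_SOCKET


def locate_core(core_id):
    """Return (socket, numa_region, module) for a core ID on one node.

    ASSUMPTION (not stated in the guide): cores are numbered
    consecutively, so cores 0 and 1 share module 0, cores 0-7 share
    NUMA region 0, and cores 0-15 share socket 0. The NUMA region and
    module indices returned here are node-wide.
    """
    if not 0 <= core_id < CORES_PER_NODE:
        raise ValueError("core_id must be in the range 0..%d" % (CORES_PER_NODE - 1))
    socket = core_id // CORES_PER_SOCKET
    numa_region = core_id // CORES_PER_NUMA_REGION
    module = core_id // CORES_PER_MODULE
    return socket, numa_region, module


if __name__ == "__main__":
    print("cores per node:", CORES_PER_NODE)
    for cid in (0, 1, 8, 16, 31):
        print(cid, locate_core(cid))
```

Under the numbering assumption, two cores with the same module index share one floating point unit, which is the relevant grouping when deciding how to place processes that are floating point intensive.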

Figure 2.1: Overview of a Cray XE compute node using the AMD Magny-Cours processors with 24 cores in total per node (image courtesy of Cray Inc.).

The shared floating point unit (FPU) in a module is the major difference from previous versions of the Opteron processor. This unit consists of two 128-bit pipelines that can be combined into a single 256-bit pipeline. Hence, the module FPU is able to execute either a single 256-bit AVX vector instruction or two 128-bit SSE/FMA4 vector instructions per instruction cycle. The FPU also introduces an additional 256-bit fused multiply/add vector instruction which can improve the performance of these operations on the processor. Each 128-bit pipeline can operate on two double precision floating point numbers per clock cycle and the combined 256-bit pipeline can operate on 4 double precision floating point numbers per clock cycle.
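The operand counts quoted above follow directly from the register widths. A minimal sketch of the arithmetic, assuming 64-bit double precision and 32-bit single precision values (the `lanes` helper is an illustrative name, not part of any library):

```python
DOUBLE_BITS = 64   # IEEE 754 double precision
SINGLE_BITS = 32   # IEEE 754 single precision


def lanes(register_bits, element_bits=DOUBLE_BITS):
    """Number of floating point values that fit in one vector register."""
    return register_bits // element_bits


if __name__ == "__main__":
    # One 128-bit pipeline (SSE/FMA4) versus the combined 256-bit pipeline (AVX).
    print("doubles per 128-bit operation:", lanes(128))
    print("doubles per 256-bit operation:", lanes(256))
    print("singles per 256-bit operation:", lanes(256, SINGLE_BITS))
```

Whether the module FPU is used as two independent 128-bit pipelines or as one 256-bit pipeline, it handles the same number of double precision values per cycle in total. The difference is whether both cores of the module can be served at once.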

One of the keys to getting good performance out of the Bulldozer architecture is writing your code in such a way that the compiler can make use of the vector-type floating point operations available on the processor. There are a number of different vector-type operations available that all execute in a similar manner: SSE (Streaming SIMD Extensions) instructions, AVX (Advanced Vector eXtensions) instructions and FMA4 (Fused Multiply-Add with 4 operands) instructions. These instructions can use the FPU described above to operate on multiple floating point numbers simultaneously as long as the numbers are contiguous in memory. SSE instructions contain a number of different operations (for example: arithmetic, comparison, type conversion) that operate on two operands in 128-bit registers. AVX instructions expand SSE to allow operations on three operands and on a data path widened from 128 to 256 bits. This is especially important in the Bulldozer architecture as a core can have exclusive access to a 256-bit floating point pipeline. The FMA4 instructions are a further expansion to SSE instructions that allow a fused multiply-add operation on 4 operands; these have the potential to greatly increase performance for simulation codes. Both the AVX and FMA4 instruction sets are relatively new innovations and it may take some time before they are effectively supported by compilers.

Figure 2.2: Bulldozer architecture overview (image courtesy of Wikipedia).

2.1.2 Service node hardware

The service nodes on HECToR have a single dual-core 2.6GHz Opteron processor and 8 GB of RAM.

2.2 Building block architecture

HECToR consists of 30 cabinets and a total of 2816 compute nodes. As there are 32 cores per compute node this leads to a total of 90,112 cores available for users. The theoretical peak performance of the system is greater than 800 TFlops.
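The core count and an estimate of the peak performance can be reproduced from the figures in this guide. The sketch below is a rough check rather than an official derivation: it assumes that each module FPU retires fused multiply-adds on 4 double precision values per cycle and that each fused multiply-add counts as 2 floating point operations, giving 8 operations per cycle per module.

```python
NODES = 2816
CORES_PER_NODE = 32
CORES_PER_MODULE = 2
CLOCK_HZ = 2.3e9

# ASSUMPTION: 4 doubles per cycle per module FPU, each processed by a
# fused multiply-add that is counted as 2 floating point operations.
FLOPS_PER_CYCLE_PER_MODULE = 4 * 2

total_cores = NODES * CORES_PER_NODE
total_modules = total_cores // CORES_PER_MODULE
peak_tflops = total_modules * FLOPS_PER_CYCLE_PER_MODULE * CLOCK_HZ / 1e12

if __name__ == "__main__":
    print("total cores:", total_cores)
    print("estimated peak: %.1f TFlops" % peak_tflops)
```

Under these assumptions the estimate comes out a little above 800 TFlops, which is consistent with the figure quoted above.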

There are 24 service nodes in the HECToR system.

2.3 Memory architecture

All the processors on the node share 32 GB of DDR3 memory. The total memory on HECToR is 90 TB.
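As a quick check of these figures, the total memory and the average memory per core follow from the node count and core count given in section 2.2. The sketch assumes decimal units (1 TB = 1000 GB) for the total:

```python
NODES = 2816
CORES_PER_NODE = 32
MEMORY_PER_NODE_GB = 32

total_memory_gb = NODES * MEMORY_PER_NODE_GB
total_memory_tb = total_memory_gb / 1000.0   # assuming 1 TB = 1000 GB
memory_per_core_gb = MEMORY_PER_NODE_GB / CORES_PER_NODE

if __name__ == "__main__":
    print("total memory: %d GB (about %.0f TB)" % (total_memory_gb, total_memory_tb))
    print("memory per core when all cores are in use: %.1f GB" % memory_per_core_gb)
```

A job that needs more memory per process than this average would have to leave some cores on each node idle.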

All 4 modules (8 cores) within a NUMA region share an 8 MB L3 cache (6 MB data), with each module having a 2 MB L2 data cache shared between its two cores. Each core has its own 16 kB L1 data cache.

Each node has a main memory bandwidth of 85.3GB/s (42.7GB/s per socket, 5.3GB/s per module, 2.7GB/s per core).
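The per-socket, per-module and per-core figures are simply the node bandwidth divided evenly across the hierarchy. A minimal sketch of that division, using the topology from section 2.1.1 (variable names are illustrative):

```python
NODE_BANDWIDTH_GBS = 85.3
SOCKETS_PER_NODE = 2
MODULES_PER_SOCKET = 8     # 2 NUMA regions x 4 modules
CORES_PER_MODULE = 2

per_socket = NODE_BANDWIDTH_GBS / SOCKETS_PER_NODE
per_module = per_socket / MODULES_PER_SOCKET
per_core = per_module / CORES_PER_MODULE

if __name__ == "__main__":
    print("per socket: %.2f GB/s" % per_socket)
    print("per module: %.2f GB/s" % per_module)
    print("per core:   %.2f GB/s" % per_core)
```

The results agree with the quoted values to within rounding. They are averages that apply when every core is accessing memory at once; a code that uses fewer cores per node has more bandwidth available to each of them.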

2.4 Interconnect

Cray XE systems use the Cray Gemini interconnect which links all the compute nodes in a 3D torus. Every two XE compute nodes share a Gemini router which is connected to the processors and main memory via their HyperTransport links.

There are 10 links from each Gemini router on to the high-performance network (HPN); the peak bi-directional bandwidth of each link is 8 GB/s and the latency is around 1-1.5 microseconds.

Further details can be found at the following links:

- The Gemini interconnect

- Workload Management and Application Placement for the CLE

- HECToR's Gemini interconnect

2.5 I/O subsystem architecture

HECToR has 12 I/O nodes to provide the connection between the machine and the data storage. Each I/O node is fully integrated into the toroidal communication network of the machine via its own Gemini chip. The I/O nodes are connected to the high-performance esFS external data storage via Infiniband fibre.

2.6 Available file systems

The detailed file system information for HECToR can be found at: Resource Management (HECToR User Guide)

2.6.1 "home"

Cray XE systems use two separate file systems: the "home" filesystem and the "work" filesystem. The "home" filesystem is backed up and can be used for critical files and small permanent datasets. It cannot be accessed from the compute nodes, so all files required for running a job on the compute nodes must be present in the "work" filesystem. It should also be noted that the "home" filesystem is not designed for the long term storage of large sets of results. For long term storage, the archive facility should be used.

2.6.2 "work"

The "work" filesystem on Cray XE systems is a Lustre distributed parallel file system. It is the only filesystem that can be accessed from the compute nodes. Thus the parallel executable(s) and all input data files must be present on the "work" filesystem before running, and all output files generated during execution on the compute nodes must be written to the "work" filesystem. There is no separate backup of data on the "work" filesystem.

2.6.3 Archive

HECToR users should use the archive facility for long term storage.

See more info about the Archive on HECToR:

2.7 Operating system (CLE)

The operating system on Cray XE is the Cray Linux Environment (CLE), which is based on SuSE Linux. CLE consists of two components: a full-featured Linux for the service nodes and Compute Node Linux (CNL) for the compute nodes.

The service nodes of a Cray XE system (for example, the frontend nodes) run a full-featured version of Linux.

The compute nodes of a Cray XE system run CNL. CNL is a stripped-down version of Linux that has been extensively modified to reduce both the memory footprint of the OS and also the amount of variation in compute node performance due to OS overhead.

2.7.1 Cluster Compatibility Mode (CCM)

If you require full-featured Linux on the compute nodes of a Cray XE system (for example, to run an ISV code) you may be able to employ Cluster Compatibility Mode (CCM). Please contact the HECToR Helpdesk for more information.
