2. HECToR Hardware

This page provides basic details on the hardware. More detailed information is available in the HECToR Best Practice Guide.

2.1 Overview

The HECToR service consists of a Cray XE6 supercomputer (Phase 3), a high-performance parallel file system (esFS Lustre), a GPU testbed machine and an archive facility.

2.2 Phase 3 (Cray XE6)

The main HECToR facility, known as phase 3, is a Cray XE6 system with a total of 2816 XE6 compute nodes. Each compute node contains two 16-core AMD processors running at 2.3 GHz, giving a total of 90,112 cores and a theoretical peak performance of over 800 Tflops. Each node presently has 32 GB of main memory, shared between its 32 cores, for a total of 90 TB. The processors are connected by a high-bandwidth interconnect built from Cray Gemini communication chips, which are arranged in a three-dimensional torus.
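The machine-wide totals above follow from the per-node figures. The short Python sketch below reproduces them. The number of double-precision flops each core completes per clock cycle is not stated in this guide; the value of 4 used here is an assumption, chosen because it is consistent with the quoted "over 800 Tflops" peak.

```python
# Per-node figures quoted in the text above.
NODES = 2816
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 16
CLOCK_HZ = 2.3e9
MEMORY_PER_NODE_GB = 32

# Assumption (not stated in this guide): 4 double-precision flops
# per core per clock cycle.
FLOPS_PER_CYCLE_PER_CORE = 4


def machine_totals():
    """Derive machine-wide totals from the per-node figures."""
    cores_per_node = SOCKETS_PER_NODE * CORES_PER_SOCKET
    total_cores = NODES * cores_per_node
    # Theoretical peak = cores x clock rate x flops per cycle, in Tflops.
    peak_tflops = total_cores * CLOCK_HZ * FLOPS_PER_CYCLE_PER_CORE / 1e12
    # Decimal units: 1 TB = 1000 GB.
    total_memory_tb = NODES * MEMORY_PER_NODE_GB / 1000
    memory_per_core_gb = MEMORY_PER_NODE_GB / cores_per_node
    return {
        "total_cores": total_cores,
        "peak_tflops": peak_tflops,
        "total_memory_tb": total_memory_tb,
        "memory_per_core_gb": memory_per_core_gb,
    }


if __name__ == "__main__":
    for name, value in machine_totals().items():
        print(f"{name}: {value:,.2f}")
```

Under these assumptions the sketch gives 90,112 cores, about 829 Tflops peak, about 90 TB of memory in total and 1 GB of memory per core.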

The phase 3 login nodes, which host interactive use and the serial queues, each have one dual-core 2.6 GHz AMD Opteron processor and 8 GB of RAM.

2.2.1 Communication Networks

The HECToR phase 3 communications network uses Cray Gemini communication chips. Each pair of XE6 nodes shares one Gemini chip, which is connected directly to the nodes' HyperTransport links. This allows the communication chip to access the processors' memory directly (direct memory access, DMA).

The Gemini chips are arranged in a three-dimensional torus, with 10 links from each router onto the high-performance network. Each link has a peak bi-directional bandwidth of 8 GB/s and a latency of around 1 to 1.5 μs.
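To put the link figures in context, the Python sketch below applies a simple latency-bandwidth (Hockney) model, t = latency + size / bandwidth, to a single link. This model is a generic approximation swapped in for illustration, not a description of how Gemini routes traffic. It also assumes that the 8 GB/s bi-directional peak is split evenly, giving 4 GB/s in each direction. The Gemini count follows from the statement that every two nodes share one chip.

```python
NODES = 2816
NODES_PER_GEMINI = 2  # every two XE6 nodes share one Gemini chip

# Assumption: the 8 GB/s bi-directional peak is split evenly,
# giving 4 GB/s in each direction.
ONE_WAY_BANDWIDTH_BYTES_PER_S = 8e9 / 2

# Quoted latency range for a link.
LATENCY_MIN_S = 1.0e-6
LATENCY_MAX_S = 1.5e-6


def gemini_count(nodes=NODES):
    """Number of Gemini chips needed when each chip serves two nodes."""
    return nodes // NODES_PER_GEMINI


def transfer_time_s(message_bytes, latency_s,
                    bandwidth=ONE_WAY_BANDWIDTH_BYTES_PER_S):
    """Latency-bandwidth (Hockney) estimate of one-way transfer time."""
    return latency_s + message_bytes / bandwidth


def crossover_bytes(latency_s, bandwidth=ONE_WAY_BANDWIDTH_BYTES_PER_S):
    """Message size at which the bandwidth term equals the latency term."""
    return latency_s * bandwidth


if __name__ == "__main__":
    print("Gemini chips:", gemini_count())
    for size in (8, 4096, 1 << 20):
        lo = transfer_time_s(size, LATENCY_MIN_S) * 1e6
        hi = transfer_time_s(size, LATENCY_MAX_S) * 1e6
        print(f"{size:>8} bytes: {lo:.3f} to {hi:.3f} us")
```

Under these assumptions, messages smaller than a few kilobytes (about 4000 to 6000 bytes) are dominated by latency, while a 1 MiB message takes roughly 263 μs and is dominated by bandwidth.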

2.3 Data I/O and storage

The phase 3 system has 12 I/O nodes, which connect the machine to its data storage. Each I/O node is fully integrated into the machine's toroidal communication network through its own Gemini chip, and is connected to the high-performance esFS external data storage via InfiniBand fibre.

There is currently over 1 PB of high-performance RAID disk storage in the shared external filesystem. The service uses the Lustre distributed parallel file system to access these disks.

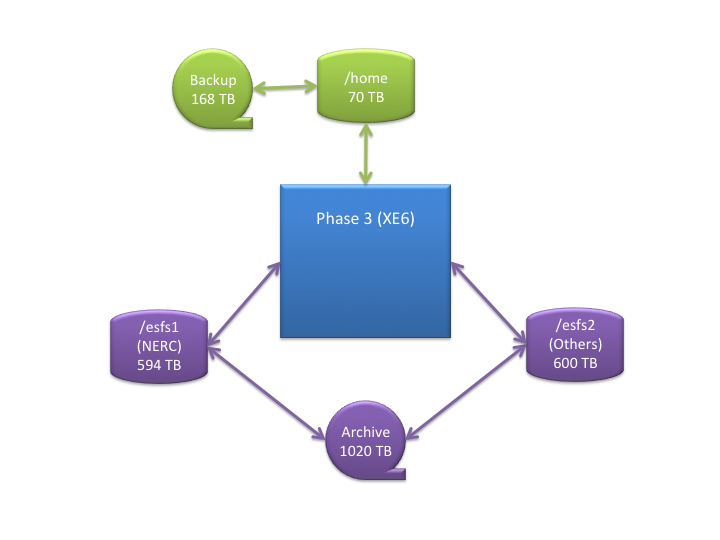

The phase 3 system has a 70 TB home filesystem held on BlueArc Titan 2200 servers. Data from the home filesystem are first backed up to 168 TB of MAID (Massive Array of Idle Disks) storage, from which they are staged to a tape system as required.

An archive facility, accessible from the high-performance esFS external filesystem, provides 1.02 PB of storage.

The current filesystem layout is summarised in the diagram below: