(6.4)

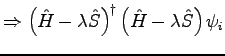

Consider the following:

Equation 6.4 is simply a modified eigenvalue equation that shares the same eigenstates as the original Schrödinger-like equation, but with modified eigenvalues. However, the lowest eigenstate of this modified equation is not the state with the lowest energy eigenvalue ![]() , but the eigenstate whose eigenvalue is closest to ![]() . Furthermore, the eigenstate with eigenvalue ![]() is now at a local minimum, regardless of whether it has the globally lowest eigenvalue or not.
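This behaviour is easy to check numerically. The sketch below is illustrative only: it assumes the modified equation has the familiar shift-and-square form, with operator `(H - e_ref)^2` and a hypothetical reference energy `e_ref`, and it uses a small synthetic symmetric matrix `H` in place of a real Castep Hamiltonian.

```python
import numpy as np

def folded_spectrum(H, e_ref):
    """Eigenpairs of (H - e_ref*I)^2, sorted by ascending eigenvalue.

    Assumed form of the modified operator; `e_ref` is a hypothetical
    reference energy chosen by the caller.
    """
    shifted = H - e_ref * np.eye(H.shape[0])
    return np.linalg.eigh(shifted @ shifted)

# Synthetic symmetric "Hamiltonian" with known eigenvalues.
rng = np.random.default_rng(0)
Q, _ = np.linalg.qr(rng.standard_normal((5, 5)))
energies = np.array([-2.0, -0.5, 1.0, 2.5, 4.0])
H = Q @ np.diag(energies) @ Q.T

e_ref = 1.2  # closest eigenvalue of H is 1.0, not the global minimum -2.0
folded_vals, folded_vecs = folded_spectrum(H, e_ref)

# The lowest state of the modified problem is still an eigenstate of H;
# its energy under the original H is the eigenvalue nearest e_ref.
lowest = folded_vecs[:, 0]
energy = lowest @ H @ lowest
```

Here the lowest eigenvalue of the modified operator is (1.0 - 1.2)^2 = 0.04, and the corresponding state has energy 1.0 under `H`, even though -2.0 is the globally lowest eigenvalue.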

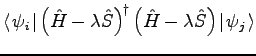

Consider now the usual Castep algorithm outlined in section 6.2, for the special case where each block contains only a single eigenstate. If we omit the orthonormalisation step then every approximate eigenstate will tend to converge to the lowest eigenstate, but if we first replace the augmented subspace matrix

(6.5)

If we choose ![]() then this algorithm will automatically choose the best approximate eigenstate closest to the initial approximation. In this case the states in the inner product are the band residuals ![]() ,

(6.6)

The only difference between our scheme and the usual RMM-DIIS method is the choice of state to add to our block's subspace. In the usual Castep scheme we add a state ![]() given by

(6.7)

In the RMM-DIIS scheme we search along this direction for the minimal residual norm, i.e. find a new trial eigenstate along the direction ![]() , and add that trial eigenstate to the subspace instead of ![]() . The schemes are so similar that we implemented both in Castep.
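A single-band residual-minimising update of this kind might be sketched as follows. This is a simplified illustration, not Castep's implementation: it assumes a real symmetric `H`, uses the unpreconditioned band residual as the state added to the subspace (Castep's preconditioning is omitted), and diagonalises the matrix of residual inner products in the two-state subspace. All function names are hypothetical.

```python
import numpy as np

def rayleigh_quotient(H, psi):
    """Energy expectation value of a (not necessarily normalised) state."""
    return (psi @ H @ psi) / (psi @ psi)

def residual_norm(H, psi):
    """Norm of the band residual (H - eps) psi for the normalised state."""
    psi = psi / np.linalg.norm(psi)
    return np.linalg.norm(H @ psi - rayleigh_quotient(H, psi) * psi)

def min_residual_step(H, psi):
    """Minimise the residual norm over span{psi, residual}, with no
    orthogonalisation against any other band."""
    psi = psi / np.linalg.norm(psi)
    eps = rayleigh_quotient(H, psi)
    r = H @ psi - eps * psi              # band residual, orthogonal to psi
    r_norm = np.linalg.norm(r)
    if r_norm < 1e-14:                   # already an eigenstate
        return psi
    basis = np.column_stack([psi, r / r_norm])   # orthonormal 2-state subspace
    res = H @ basis - eps * basis        # residual of each basis state at fixed eps
    A = res.T @ res                      # matrix of residual inner products
    _, coeffs = np.linalg.eigh(A)        # lowest eigenvector = minimal residual
    new_psi = basis @ coeffs[:, 0]
    return new_psi / np.linalg.norm(new_psi)

# Start near the middle eigenstate (eigenvalue 1.0) of a toy Hamiltonian.
rng = np.random.default_rng(1)
H = np.diag([-2.0, -0.5, 1.0, 2.5, 4.0])
psi0 = np.array([0.0, 0.0, 1.0, 0.0, 0.0]) + 0.1 * rng.standard_normal(5)
psi1 = min_residual_step(H, psi0)
r0, r1 = residual_norm(H, psi0), residual_norm(H, psi1)
```

Because the original trial state lies in the subspace, the residual norm cannot increase in such a step, so `r1 <= r0` here. Nothing in the update pulls the state towards the lowest eigenvalue, which is why no orthogonalisation against lower bands is needed during the update itself.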

After all of the eigenstates have been updated, a global orthonormalisation is still necessary before a new density can be constructed. However, this single orthonormalisation per SCF cycle is considerably fewer than in the usual Castep scheme, where three or four orthonormalisations per SCF cycle are common.
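As an illustration of such a single global step, the sketch below orthonormalises a set of independently updated states using Löwdin (symmetric) orthonormalisation. The choice of method is an assumption made for the example, since the text does not say which orthonormalisation Castep uses; real-valued states stored as columns are also assumed.

```python
import numpy as np

def lowdin_orthonormalise(states):
    """Return orthonormal columns spanning the same space as `states`.

    Loewdin orthonormalisation: states @ S^(-1/2), where S is the overlap
    matrix. Assumes real, linearly independent columns.
    """
    overlap = states.T @ states
    s, U = np.linalg.eigh(overlap)
    inv_sqrt = (U / np.sqrt(s)) @ U.T    # U diag(s^-1/2) U^T
    return states @ inv_sqrt

# Three non-orthogonal trial states in a 6-dimensional space.
rng = np.random.default_rng(2)
trial_states = rng.standard_normal((6, 3))
ortho_states = lowdin_orthonormalise(trial_states)
```

After this step `ortho_states.T @ ortho_states` is the identity to numerical precision, so the states can be used to build a density.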

Both schemes converge to the eigenstate closest to the current trial eigenstate, so it is important to have a reasonable set of trial eigenstates before using them. We opted to use the usual Castep optimisation for four SCF cycles before switching to one of the new schemes.