CASCADE: Cloud System Resolving Modelling of the Tropical Atmosphere

G. M. S. Lister, L. Steenman-Clark, National Centre for Atmospheric Science – Computational Modelling Services

S. W. Woolnough, Dept of Meteorology, University of Reading

Strong surface heating by the sun in the tropics is communicated to the atmosphere through convection. Convection is responsible for a significant fraction of tropical rainfall and plays a pivotal role in the heat and moisture budgets of the tropical climate system. It releases latent heat that drives the tropical circulation and controls tropical sea-surface temperatures and, through interaction with atmospheric wave activity, contributes to shaping the global climate. Convection operates on scales ranging from that of an individual cloud, through the mesoscale, up to the synoptic and planetary scales. To represent these scale interactions, a model must therefore cover a sizeable fraction of the Earth, to capture the planetary and synoptic scales, while still resolving individual km-sized clouds. The CASCADE project (NERC Reference: NE/E00525X/1) was devised to investigate these phenomena by means of very large, high-resolution simulations.

CASCADE uses the Met Office Unified Model (UM) as its main tool. This software system is used by academic researchers and meteorological services worldwide in a multitude of configurations, modelling a wide-ranging set of processes from Earth-system simulations to operational weather forecasts. The National Centre for Atmospheric Science - Computational Modelling Services group (NCAS-CMS), based at the University of Reading, has ported the software to HECToR and supports several versions of it.

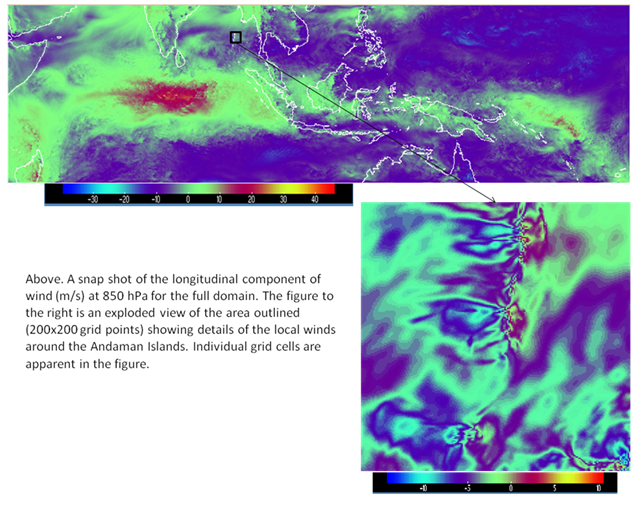

Early CASCADE integrations at 40km, 12km and 4km horizontal grid resolution ran successfully on HECToR using version 7.1 of the UM. The largest of these relatively low-resolution simulations covers the Indian Ocean and the Maritime Continent and extends into the Western Pacific. At 4km resolution this domain, spanning 140° of longitude and 40° of latitude with 70 vertical levels, comprises ~290 million grid points and generates ~4TB of diagnostic model output per model day. Because all output in these integrations was directed through a single processor, I/O represented a significant portion of their overall cost, and it soon became apparent that the desired 1.5km-resolution Indian Ocean simulation would not be feasible without a modified I/O strategy. In collaboration with Cray (CoE [1]), an asynchronous I/O model was implemented in version 6.1 of the UM; it has now been ported to version 7.6, allowing us to run the model on the domain described above at 1.5km resolution.
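The idea behind asynchronous I/O servers can be illustrated with a toy model. The sketch below is not the UM implementation (which is Fortran with dedicated MPI I/O server processes); it uses Python threads and queues as stand-ins, and every name in it (`io_server`, `compute`, `run`, the field names) is hypothetical. The compute loop hands each diagnostic field to an I/O server's queue and carries straight on with the next timestep, instead of waiting for a single processor to finish writing.

```python
import queue
import threading

def io_server(server_id, inbox, log):
    """Hypothetical I/O server: drain its queue until a None sentinel arrives."""
    while True:
        item = inbox.get()
        if item is None:  # compute side has finished
            break
        step, field, data = item
        # A real server would write the field to disk here; we only record it.
        # (list.append is safe to call from several threads in CPython.)
        log.append((server_id, step, field, len(data)))

def compute(n_steps, fields, inboxes):
    """Stand-in for the model: advance a toy state and hand off diagnostics."""
    state = [0.0] * 1000
    for step in range(n_steps):
        state = [x + 1.0 for x in state]  # placeholder for a real timestep
        for i, field in enumerate(fields):
            # Fields are spread round-robin over the servers. put() returns as
            # soon as the item is queued, so computation overlaps with writing.
            inboxes[i % len(inboxes)].put((step, field, list(state)))
    for inbox in inboxes:
        inbox.put(None)  # tell every server to stop

def run(n_steps=3, fields=("theta", "u", "v", "precip"), n_servers=2):
    # Bounded queues: compute only blocks if the servers fall far behind.
    inboxes = [queue.Queue(maxsize=8) for _ in range(n_servers)]
    log = []
    servers = [threading.Thread(target=io_server, args=(k, q, log))
               for k, q in enumerate(inboxes)]
    for s in servers:
        s.start()
    compute(n_steps, fields, inboxes)
    for s in servers:
        s.join()
    return log
```

The bounded queue is a design choice in this sketch: it caps the memory held by pending output, which mirrors (loosely) why the real I/O servers need large amounts of memory to buffer fields.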

The model has 10222 × 2890 × 70 (~2 billion) grid points and generates ~12TB of output per model day. Eight I/O servers run on four under-populated XE6 nodes to satisfy their large memory requirements, and the model itself runs on up to 3072 processors, again on under-populated nodes to secure the memory it needs. The five-day simulation took close to 400 hours of wallclock time. Some initial data processing is performed at HECToR using the NERC lms (large-memory server) machine, and the processed data are transferred to Reading by GridFTP for analysis on local machines.
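The figures quoted above can be turned into a rough resource budget. This is a back-of-the-envelope sketch, assuming decimal units (1 TB = 10^12 bytes), treating "~12TB" and "close to 400hrs" as exact, and averaging the output rate over the whole run even though real diagnostic output is bursty; the function name `run_budget` is hypothetical.

```python
# Figures quoted in the text for the 1.5 km Indian Ocean run.
NX, NY, NZ = 10222, 2890, 70
OUTPUT_TB_PER_MODEL_DAY = 12   # "~12TB", treated here as exact
MODEL_DAYS = 5
WALLCLOCK_HOURS = 400          # "close to 400 hours", treated here as exact
N_IO_SERVERS = 8
POINTS_4KM = 290e6             # "~290 million" points on the 4 km domain

def run_budget():
    points = NX * NY * NZ
    total_tb = OUTPUT_TB_PER_MODEL_DAY * MODEL_DAYS
    seconds = WALLCLOCK_HOURS * 3600
    # Mean sustained write rate over the whole run, in decimal MB/s.
    mb_per_s = total_tb * 1e12 / seconds / 1e6
    return {
        "grid_points": points,
        "ratio_vs_4km": points / POINTS_4KM,
        "total_output_tb": total_tb,
        "mean_write_mb_per_s": mb_per_s,
        "per_server_mb_per_s": mb_per_s / N_IO_SERVERS,
        "model_days_per_wallclock_day": MODEL_DAYS / (WALLCLOCK_HOURS / 24),
    }

for key, value in run_budget().items():
    print(f"{key}: {value:,.2f}")
```

Under these assumptions the 1.5km grid has about 2.07 billion points (roughly seven times the 4km domain), the five-day run produces about 60TB, and the mean write rate is about 42MB/s, or about 0.3 model days per wallclock day.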

References

- [1] Cray CoE Science Delivery Programme: HiGEM Decadal Predictions